When Apple first introduced Apple Intelligence on iOS 18.4, iPadOS 18.4, and macOS Sequoia 15.4, I was cautiously curious. These days, it feels like every platform is rolling out some form of AI. Some features are genuinely helpful, while others feel like tech for tech’s sake. But after a full week of testing Apple’s version on my iPhone, iPad and MacBook, I can honestly say it feels different. It’s subtle, actually useful, and blends naturally into the way I already use my devices.

Here’s what stood out. I’ve put together a short video that walks through all the new features across iPhone, iPad and MacBook. Check it out!

Cleaner Photos

One of the first things I tried was the new Clean Up tool in Photos. I had this picture of my coffee from a weekend café visit. The lighting was great, the coffee looked perfect, but there were a few people in the background who distracted from the shot.

With just a tap, I selected them, and Clean Up wiped them out of the frame smoothly. No weird patches, no awkward edges.

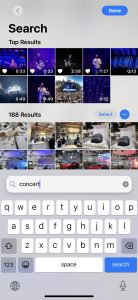

Smarter Searches In Photos

Next, I tested out the improved photo search by typing just one word: concerts.

Instantly, it pulled together the concerts I had captured over the years, even finding moments I had forgotten I recorded. It works on videos too! No more endless scrolling through the photo roll to find that one gig.

Endless Genmojis

Then I got into Genmoji to create new emojis when I text. It turned out to be weirdly addictive. I was craving chicken rice, so I typed in “girl eating chicken rice” and watched as it generated a range of emoji-like characters capturing that exact vibe.

I could swipe through the versions, tweak them, and send them out in chats. It’s surprisingly expressive and a lot more fun than standard emojis.

Siri Support for English (Singapore) That Gets Me

The biggest surprise, though, came when I asked, “Got chicken rice near me?” For the first time, Siri understood me. With Siri support for English (Singapore), Siri feels more relatable and far more useful. What impressed me was how well it handled changes in my request. I could stumble mid-sentence or switch things up, like saying, “Siri, set a timer for 5 mins… no, 10 mins,” and Siri would still follow along without missing a beat.

I also discovered a new way to use Siri without even speaking. In quieter places like meetings or libraries, I now use the Type to Siri feature. By double-tapping the bottom of the iPhone screen, or pressing the Command key twice on my iPad or Mac keyboard, I can bring up a Siri keyboard.

Visual Intelligence With Camera Control

Another feature that impressed me was Visual Intelligence with Camera Control, available on the iPhone 16 lineup. I didn’t realise how handy it would be until I started using it in my daily routine.

One night, I pointed my camera at a new humidifier I had just found. Not only did it detect the model, but it instantly pulled up usage instructions. I was also able to ask ChatGPT, which is integrated directly into the system, for a list of calming essential oils to pair with it.

Beyond that, Visual Intelligence has been useful in a bunch of other everyday situations. It can scan and summarise text, which is great for quickly understanding flyers or documents. It can also read text out loud, translate signs and menus, and detect phone numbers or email addresses. If I come across a product or item I like, it offers to search Google for where to buy it.

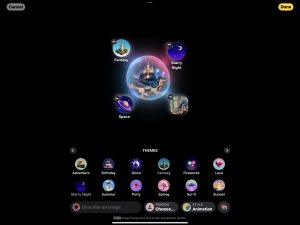

Image Playground, Especially for Visual Thinkers

I mostly use my iPad for note-taking and creating, so the new Image Playground feature clicked with me.

What I enjoyed was how flexible it was. I could create anything! I decided to try creating a garden tea party scene using an existing picture I took, and added prompts like spring and sunset. The result looked exactly like the vibe I had imagined. Soft colours, golden light, and a dreamy, outdoor setting with flower garlands and teacups under fairy lights.

With a different prompt like Starry Night, Apple Intelligence catches on and creates another beautiful image.

I also played with creating images based on someone’s appearance. I chose the appearance of a friend and added prompts like hiker and rainforest, and the system generated a visual of her trekking through lush greenery. It honestly felt like my imagination had an engine behind it.

Image Wand To Create Illustrations from Sketches

As I was doing up a poster on astronomy, I wanted to add a nice illustration. First, I drew a rough sketch.

Then, using the Image Wand, I circled the rough drawing in my notes, added a simple prompt, and it turned into a clean, relevant visual.

It will even provide suggestions based on the surrounding content. That kind of contextual help makes it so efficient and seamless.

I could select animation, sketch or illustration styles depending on the mood I was going for. Each style brought a slightly different perspective, and I loved being able to experiment with all three.

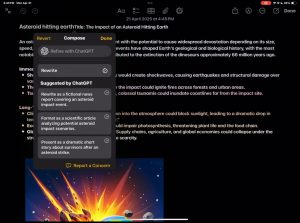

Writing Tools

While working on that topic, I also tapped into ChatGPT, which is now integrated directly into Apple Intelligence. I asked it for information on real-life asteroid impacts and how they’re studied.

The response was incredibly detailed, so I used the built-in Writing Tools to summarise it for my notes. In just a few taps, I had a clean, concise summary I could drop straight into my document without losing any key points.

Writing is a huge part of my day, and the new Writing Tools built into macOS Sequoia have made things a lot easier.

I can highlight any paragraph and get suggestions in different tones like professional, friendly or even poetic. Proofreading helps clean up awkward phrasing, and Summarise is perfect for breaking down long articles and PDFs.

Simpler Mail

In Mail, everything feels more efficient now. Important emails show up at the top, summaries replace the usual opening lines, and smart replies suggest answers to specific questions.

Most of the time, I just pick a suggestion, tweak it slightly and hit send.

What About Privacy?

Almost all of this runs directly on the device, which is a big plus for me. For more complex tasks, Apple uses something called Private Cloud Compute. The key difference is that your data is never stored or shared, even with Apple. Security experts can even audit the code to make sure Apple keeps that promise.

Final Thoughts

Apple Intelligence isn’t trying to be flashy. It doesn’t overwhelm you with features. It simply makes everyday tasks easier. Most importantly, it feels personal. It understands how I speak, what I care about and how I work. That’s not something I’ve experienced with other AI tools, and honestly, it’s the main reason I’ll keep using it.

Apple Intelligence is available in beta on all iPhone 16 models, iPhone 15 Pro, iPhone 15 Pro Max, iPad mini (A17 Pro), and iPad and Mac models with M1 and later.

To get the full set of Apple Intelligence features, update your devices to iOS 18.4, iPadOS 18.4, and macOS Sequoia 15.4.