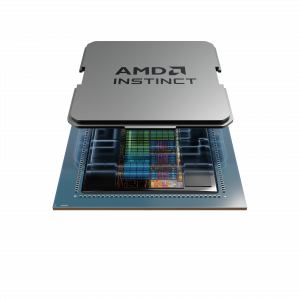

AMD Instinct MI300 Series: A New Era in Computing

The AMD Instinct MI300 Series accelerators bring together AMD's most advanced technologies. According to AMD President Victor Peng, these accelerators are expected to play a significant role in large-scale cloud and enterprise deployments. AMD's combined approach to hardware and software is meant to help cloud providers, OEMs, and ODMs build AI-powered solutions.

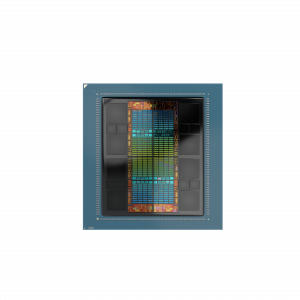

The Power of AMD Instinct MI300X

The AMD Instinct MI300X accelerators, built on the new AMD CDNA 3 architecture, deliver significant improvements over their predecessor, the MI250X. These include nearly 40% more compute units, 1.5x the memory capacity, and 1.7x the peak theoretical memory bandwidth. Each MI300X carries 192 GB of HBM3 memory with 5.3 TB/s of peak memory bandwidth, which makes it well suited to large, memory-hungry AI workloads.

According to AMD, the AMD Instinct Platform offers up to 1.6x higher LLM inference throughput than Nvidia's H100 HGX, and the MI300X is the only option capable of running inference for a 70B-parameter model on a single accelerator.
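A quick back-of-the-envelope calculation shows why memory capacity matters for the 70B claim. The Python sketch below estimates the memory taken by the model weights alone, assuming FP16/BF16 storage (2 bytes per parameter) and ignoring the KV cache, activations, and runtime overhead, all of which add to the real requirement. The 80 GB figure for an H100 is an assumption (the per-GPU capacity of an H100 SXM), not a number from the announcement, and the helper names are hypothetical.

```python
# Rough weight-memory estimate for serving an LLM on a single accelerator.
# Assumptions (not from the article): FP16/BF16 weights at 2 bytes per
# parameter; KV cache, activations, and runtime overhead are ignored.

BYTES_PER_PARAM_FP16 = 2


def weight_footprint_gb(num_params: float,
                        bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Return the size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def fits_on_one_device(num_params: float, device_memory_gb: float) -> bool:
    """Return True if the weights alone fit in one device's memory."""
    return weight_footprint_gb(num_params) <= device_memory_gb


if __name__ == "__main__":
    params_70b = 70e9
    mi300x_gb = 192  # HBM3 capacity quoted in the article
    h100_gb = 80     # assumption: per-GPU memory of an H100 SXM
    print(f"70B weights at FP16: {weight_footprint_gb(params_70b):.0f} GB")
    print("Fits on one MI300X:", fits_on_one_device(params_70b, mi300x_gb))
    print("Fits on one H100 (80 GB):", fits_on_one_device(params_70b, h100_gb))
```

Under these assumptions, 70 billion FP16 parameters need about 140 GB, which fits within 192 GB but not within 80 GB. Quantizing the weights to 8 or 4 bits would shrink the footprint and change the comparison, so this is only a sketch of the capacity argument.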

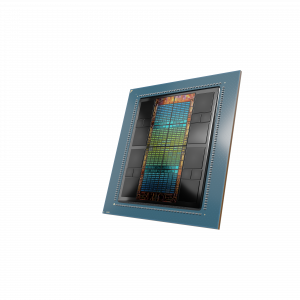

AMD Instinct MI300A: A Breakthrough in Data Center APUs

AMD describes the Instinct MI300A APU as the world's first data center APU designed for HPC and AI. It combines AMD CDNA 3 GPU cores, "Zen 4" CPU cores, and 128 GB of HBM3 memory in a single package, and delivers approximately 1.9x the performance-per-watt of the previous-generation MI250X on FP32 HPC and AI workloads. Because the CPU and GPU cores share unified memory and cache resources, the MI300A offers a highly efficient platform for the most demanding HPC and AI workloads.

ROCm Software and Ecosystem Partners

AMD also announced the AMD ROCm 6 open software platform, which the company says significantly improves AI acceleration performance. ROCm 6 introduces several new features for generative AI and supports widely used open-source AI models and frameworks, reflecting AMD's commitment to open-source AI software. AMD's ecosystem efforts, including its acquisitions of Nod.AI and Mipsology and its collaborations with Lamini and MosaicML, further strengthen its position in AI and HPC.

You can learn more about AMD's announcements at AMD.com and on the AMD YouTube channel.